Last mile for web search

The last few decades have been dominated by a single search paradigm: the user enters keywords into a search box and gets back a list of links. The search engine does the hard work of sifting through the enormous pool of information on the web, but leaves the last mile to the user: filtering the links and ads down to a few relevant ones, opening them, and then taking the time to consume the content and deduce the information actually needed.

While the current search paradigm is still appropriate for some tasks, a growing number of situations in our increasingly busy and changing lives call for a new paradigm: returning a short, to-the-point answer to our questions. These questions come in increasingly complex semantic forms as users become more comfortable using natural language for their queries. This also creates problems for current approaches, which often struggle to understand the question, let alone provide a relevant answer.

With Kagi.ai, we have built a platform that provides direct, to-the-point answers to any type of question. In the future, we expect machines will be able to answer even questions that require digesting pages of documents, or even entire books or videos, and to produce a summary within a given budget of words or sentences.

The Present

"Sorry, I didn't quite get that" is the primary source of frustration with users when using AI assistants today. When the users do not get at least an attempt at an answer, they are left without a path to proceed and start to gradually lose interest in the platform. This leads to the effect where the engagement with AI assistants converges to weather, time and music - definitely not what the creators of these platforms envisioned! This in turn causes the AI assistant adoption to suffer.

We believe that the ability to answer any kind of general question, including open-ended ones, will be table stakes for any future AI assistant or search engine. It is the crucial ingredient for keeping engagement levels up.

To better assess the current situation, we designed a test to measure the factual question-answering capabilities of all AI assistants on the market today, including Google, Siri and Alexa. The test consists of 100 randomly sampled natural-language factual questions sourced from relevant public research datasets. To be scored as correct, the AI must provide a direct, unambiguous answer to the test question.
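The scoring rule above can be illustrated with a simple accuracy computation. This is a minimal sketch, not the harness actually used for the test: the `GradedAnswer` record and the `accuracy` function are hypothetical names, and the sketch assumes each assistant's answer has already been graded by a person as direct (or not) and correct (or not).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GradedAnswer:
    """One test question as graded by a reviewer (hypothetical schema)."""
    question: str
    is_direct: bool   # a direct answer, not a list of links or "I don't know"
    is_correct: bool  # the answer is unambiguous and factually right


def accuracy(graded: Sequence[GradedAnswer]) -> float:
    """Return the percentage of questions that count as correct.

    A question only counts if the answer is BOTH direct and correct,
    mirroring the strict rule described in the text.
    """
    if not graded:
        raise ValueError("no graded answers to score")
    hits = sum(1 for g in graded if g.is_direct and g.is_correct)
    return 100.0 * hits / len(graded)


if __name__ == "__main__":
    sample = [
        GradedAnswer("Who wrote Hamlet?", is_direct=True, is_correct=True),
        # Returned only links, so it scores zero regardless of their content.
        GradedAnswer("How tall is Mount Everest?", is_direct=False, is_correct=False),
        GradedAnswer("When did World War II end?", is_direct=True, is_correct=True),
        # A direct answer, but not the one the question intended.
        GradedAnswer("Do cats have wings?", is_direct=True, is_correct=False),
    ]
    print(accuracy(sample))  # 50.0
```

Because the test uses exactly 100 questions, each question scored as correct adds one percentage point to the reported accuracy.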

We present the results below.

Chart: Current AI factual question-answering accuracy (higher is better; last updated December 2019)

Apple has done the most work on understanding and communicating emotions and is probably leading the pack in social-context-aware communication. But this focus, while important, leaves Siri trailing the pack by a large margin in general question answering. Many users agree, finding Siri next to useless for information retrieval and using it mostly for simple command-like tasks.

Alexa has invested heavily in its voice-driven platform and gives detailed answers when the answer is in its knowledge base. Unfortunately, that is not the case for long-tail queries. Users are also starting to get lost in the skills jungle, while engagement converges towards just a few utilities such as weather, music and time. We all expected to get a C-3PO in our rooms, only to hear the dreaded "Hm, I do not know that" for anything out of the ordinary we ask.

Samsung is trying hard to catch up, but Bixby has mediocre accuracy and still lacks name recognition in the AI assistant world. Microsoft had a good start with Cortana but recently changed its roadmap, moving away from the consumer market. Hound is a relatively new Bay Area startup, and we include its results for reference.

Not surprisingly, Google does best among its peers (excluding Kagi, that is) in our test. It is worth noting, however, the scrutiny it is receiving over its business practices, well summarized here:

"The idea that we get our information as citizens through algorithms determined by the world’s largest advertising company is my definition of dystopia."

Ian Bremmer (@ianbremmer), February 22, 2018

There is also a fundamental business model question on the horizon: even if Google manages to create a perfect AI that can answer any question you have, who would be left to click on ads (which still account for over 80% of its revenue)? That means the burden of innovation will likely fall on someone else.

Introducing Kagi

Do you have wise friends or colleagues who can clearly convey something in a few sentences? We all do. Our goal with Kagi is to be that wise friend you can always rely on for a clear and concise answer.

You can see some of this in action if you have tried our technology demo. Now imagine for a moment that you could break down each of those answers and sentences and explore them individually, unrolling new ideas and concepts. It is not just about how we receive information but also about how we use it to broaden our horizons.

We want to establish a connection between human and machine at the magical, emotive level where human-computer interaction happens. We are thinking deeply about what the essence of a dialogue between human and machine should be.

At Kagi, we believe that the combination of the following two factors will effectively remove the barrier to AI assistant adoption and enable a new search paradigm to emerge:

Let's deconstruct this.

With Kagi, we have essentially eliminated the "Sorry, I didn't quite get that" responses. Our novel approach allows us to take advantage of the entirety of available human knowledge. This is in contrast to methods like scripted responses or knowledge graphs, which by design are limited in the nature and types of questions they can handle. That also makes them hard to scale, and when they do scale, they threaten to collapse under the weight of their own complexity.

With our approach, the AI's input repertoire is unlimited. That means Kagi.ai will always attempt to answer any question the user asks. Clearly, Kagi is not always on the mark, but it is improving rapidly and also learns from user input (when you mark an answer as good or bad). We also believe that this approach will ultimately lead to better user experience and engagement in the long term. As humans, we are much more likely to accept toys and robots that we know are imperfect, even building a relationship with their 'character'. On the other hand, AIs that try to be perfect set high expectations and almost always go on to disappoint. Instead, we want to gradually build trust and connection, knowing that the machine will get better over time.

There is a vast amount of information available on the web, and Kagi is attempting to make sense of all of it. It acts as a gateway to the giant repository of human knowledge, always happily waiting to serve our requests. We have set a goal of 90% answer accuracy, as we believe this is the tipping point at which engagement takes off. We are not quite there yet (our current accuracy will be revealed in a minute), but there is a visible road ahead.

You may ask: why not shoot for 100%? It turns out that there are inherent limitations in the way we as humans interact and process information that make it impossible to always get the answer we expect, and sometimes there is simply no clear answer. Here is an example we encountered in the early days of working on Kagi.ai.

We were training Kagi to answer yes/no questions, and one we asked was "Do cats have wings?". To our surprise, it responded "Yes". When we debugged why, it turned out that under certain circumstances cats can grow something resembling wings (this is documented online), and people call these "wings". So we rephrased the question to better match our original intention and asked "Can cats fly?", and it again responded "Yes"! This time we were sure it was a mistake, but when we debugged it, it turned out that cats can 'fly' if you buy them a plane ticket. This would make a good joke in human company, and an even better one coming from an AI.

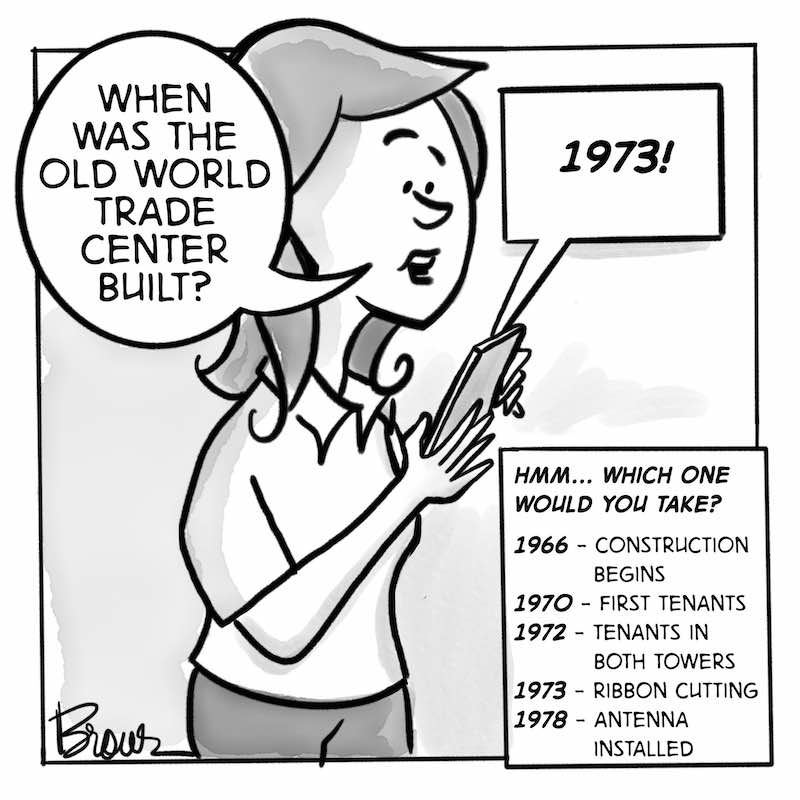

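Sometimes the question itself is not clear enough. Take the following example: when were the Twin Towers built? Construction took several years, so a reasonable answer could be the year it started, the year it finished, or the whole span.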

These make for interesting challenges, and addressing them will come down to human-computer interaction design, as we discussed before.

Kagi and the new paradigm of AI

As discussed, our approach represents a paradigm where the AI's scope of possible inputs is virtually unlimited, shifting the focus to improving the quality and accuracy of outputs. We could call it a top-down approach. This is in contrast to the bottom-up approach largely in use today, where the user is forced to memorize the commands, types of interactions and questions the AI allows.

To show the superiority of this approach, we are excited to put our current results into perspective.

Chart: Current AI factual question-answering accuracy (higher is better; last updated December 2019)

We are proud to state that Kagi currently achieves state-of-the-art results in open-domain question answering. When we built the first prototype in October 2018, we were at "only" 36% accuracy (still better than most AI assistants today), and we have continually improved the quality and accuracy of the answers, surpassing every AI on the market.

Your Future AI

Let's take a moment and envision a future where AI assistants might be graded by their ability and, as a result, potentially come at different price points. You can imagine being able to purchase a beginner, advanced or expert-level AI. They will come with character traits, different tact, charm and wit, a certain 'pedigree' and 'interests', and controllable bias: for example, you will be able to choose between an AI with a conservative or liberal (or agnostic!) view of the world.

In this future, instead of everyone sharing the same Siri, we will each have our very own Mike or Julia, or maybe Donald, the AI. When you ask your own AI a question like "Does God exist?", it will answer relying on the biases you preconfigured. When you ask it to recommend a good restaurant nearby, it will do so knowing what kind of food you like. The same will happen when you ask it to recommend a good coffee maker: it will know the brands you like, your likely budget and the kind of coffee you usually drink. You will volunteer all this information to the AI, much as you would to a human assistant, but to a much larger extent. And you will do it without fear, as business models will shift away from ad-driven ones. This will make AI assistants indispensable in our even busier future lives.

We invite you to play with Kagi and have some fun asking it weird questions you would never ask Siri or Alexa. And if you like what we are building, please share it with your friends. Thank you.