Sunday, December 1, 2019 By Vladimir Prelovac

Everyone by now knows how search works – you provide keywords and the search engine provides relevant links. Then you go the last mile to check the links and hope you find the information you wanted.

While this process works for some tasks, our increasingly busy lives demand a new process – direct answers to our questions. And as technology improves and users get more comfortable with it, our questions become more complex and use natural language instead of a few keywords. But current approaches often struggle to understand the question, let alone provide a relevant and accurate answer.

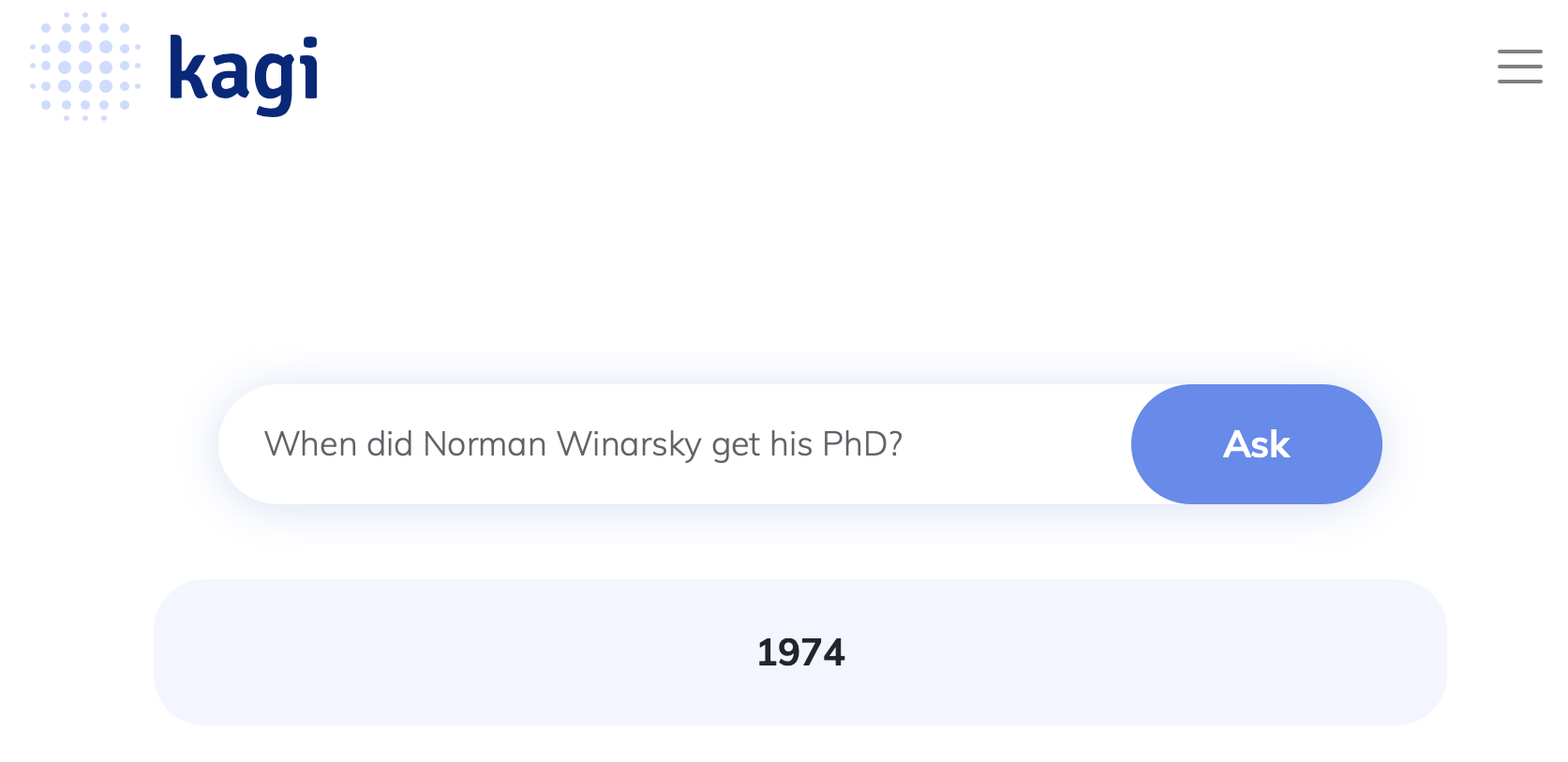

For example, what happens when you ask Siri, "When did Norman Winarsky get his PhD?" Dr. Winarsky helped create Siri, so she has to know the answer, right? Sadly, it seems that Siri doesn’t know.

She expects you to spend the next few minutes scanning the links, opening one that looks promising, and scanning the opened page to find out. Come on, Siri.

This less-than-ideal user experience makes Siri less than popular. We know the correct answer exists out there in simple, findable language, yet somehow Siri – and all the other assistants for that matter (Alexa, Cortana, Bixby, etc.) – failed to retrieve it.

Kagi can fill this gap.

An end-to-end platform that provides direct answers to all kinds of questions, Kagi has abilities that go beyond any AI assistant in today’s market.

"Sorry, I didn't quite get that" is a huge source of user frustration with AI assistants. When you don’t get even an attempt at an answer, how much more effort do you want to spend on the question and the platform itself? Engagement with frustrating AIs gradually grinds down to weather, time, and music – definitely not what the creators of these platforms envisioned!

To maintain engagement, AI assistants must be able to answer any kind of general question, regardless of the language used.

We tested the factual answering abilities of all AI assistants on the market today. To score well, the AIs must provide direct, clear answers to the test questions.

(higher is better; last updated December 2019)

[Chart: factual answering scores for Google Assistant, Microsoft Cortana, SoundHound Hound, Amazon Alexa, Samsung Bixby, and Apple Siri]

Apple as a company understands and communicates well with emotions, giving Siri an edge in focusing on social context. But this focus, while important, causes Siri to trail the pack in factual answering abilities. Users who find Siri nearly useless at information retrieval have reduced their engagement with it to commands for simple tasks.

Amazon invested heavily in its voice-driven platform, so Alexa can provide many detailed answers from its knowledge base, although its performance suffers on long-tail queries. The hype had us expecting C-3PO in our rooms, only to get the dreaded "Hmm, I do not know that" for anything out of the ordinary that we ask. And Alexa’s jungle of skills can frustrate users, whose engagement eventually converges on the basics of weather, music, and time.

Samsung is trying hard to catch up, but Bixby has mediocre accuracy and still lacks name recognition among AI assistants. Microsoft had a good start with Cortana but recently decided to change the roadmap, moving away from the consumer market. SoundHound is a Bay Area startup we included as a reference.

Not surprisingly, Google performs the best among the assistants in this test. However, the scrutiny brought to bear on its business practices damages its credibility, as noted in this tweet:

The idea that we get our information as citizens through algorithms determined by the world’s largest advertising company is my definition of dystopia.

— ian bremmer (@ianbremmer) February 22, 2018

And if Google created a perfect AI to answer any question, where would Google put the ads (more than 80% of its revenue)? Yeah, innovation will likely happen somewhere else.

You know that one friend or colleague who can clearly convey an idea in just a few words? We all know somebody like that. We want Kagi to be that fountain of clear knowledge for you.

Try our demo to see how it works. We want to connect the human and the machine at the emotional and instinctual level, and to do that, we’re deeply investigating the essence of human/machine dialogue. At Kagi.ai, we believe that combining two factors will increase engagement with AI assistants and develop a new search standard.

Scripted responses and knowledge graphs (used by most contemporary assistants) are limited in the types of questions they can handle, which makes them difficult to scale. With Kagi, we eliminated the “Sorry, I didn't quite get that” response. Our approach allows us to take advantage of the entirety of available human knowledge.

Kagi will always attempt to answer any question asked. Clearly, Kagi will still miss the mark sometimes, but it improves with every point of feedback it gets. This approach will lead to better user experiences and more long-term engagement.

As humans, we tend to accept toys and robots that we know are imperfect, even building relationships with their “characters.” On the other hand, AIs that try to be perfect set a high bar for expectations and almost always disappoint. Here at Kagi.ai, we want to gradually build trust and connection by making it clear that the machine will improve over time.

Kagi acts as a gateway to the giant repository of human knowledge, patiently waiting to serve our needs. We set the goal of 90% answering accuracy as the tipping point where true engagement begins. We have yet to achieve this goal (more on that in a minute) but can see it on the road ahead.

So why not shoot for 100%? Because we’re human. It turns out that the ways in which humans interact and process information make it impossible to always get the answer we want. And sometimes a clear answer simply doesn’t exist. Let's review an example encountered in Kagi’s early days.

In training Kagi to answer yes/no questions, we asked, "Do cats have wings?" To our surprise, it said yes! It turns out that under certain circumstances cats can grow appendages that people would call wings. Not what we meant, but fair enough.

So then we asked, "Can cats fly?" Kagi said yes – if you buy them plane tickets. While it may make for a funny joke from a human, it’s a little frustrating from an AI.

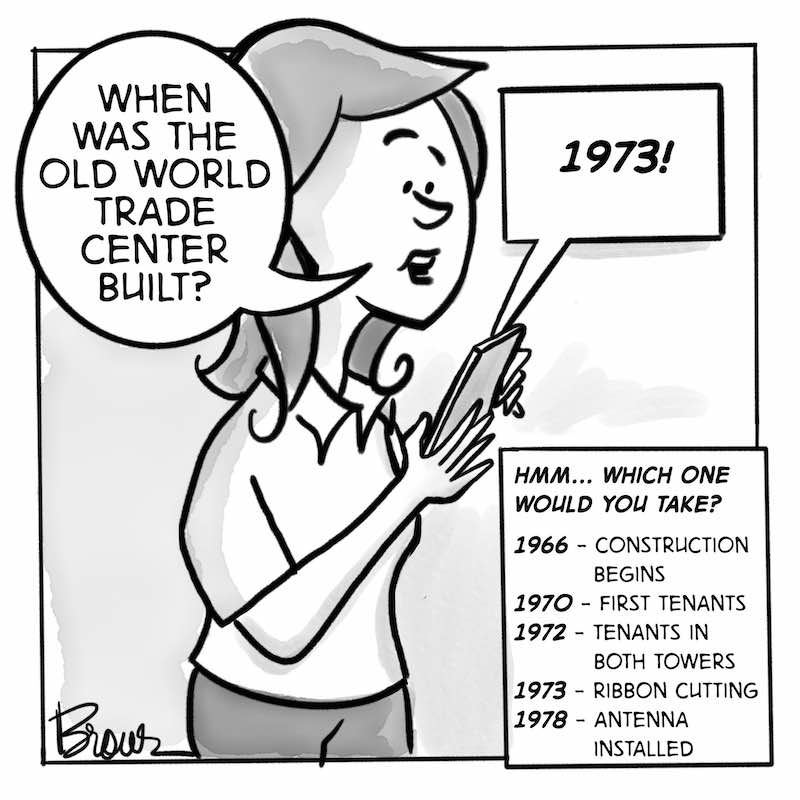

Sometimes the question is not specific enough. Take the following example: "When were the Twin Towers built?"

These challenges all boil down to human-computer interaction (HCI) design.

With Kagi, we want to break free of the bottom-up approach, where users have to restrict their interactions to the limited inputs that an AI can understand. We’re creating a model with a virtually unlimited scope for inputs, allowing the AI to focus on the quality and accuracy of outputs.

To show the superiority of our approach, we added our current results to the table you saw earlier.

(higher is better; last updated December 2019)

[Chart: factual answering scores for Kagi.AI, Google Assistant, Microsoft Cortana, SoundHound Hound, Amazon Alexa, Samsung Bixby, and Apple Siri]

Kagi currently achieves state-of-the-art results in open-domain querying and continues to set the standard on the accuracy ladder. Even our first prototype in October 2018 achieved better answer relevancy than Alexa, Bixby, and Siri can achieve today.

Obviously, we can use Kagi's unstructured knowledge in intelligent assistants, home robots, mobile apps, games, and such, both alone and in addition to existing QA capabilities. But let’s take a look at a few less-obvious scenarios where Kagi can help.

My own kids (7, 4, and 2) enjoy playing with new technology like Alexa. But they quickly lose interest when most of their questions get the answer even adults hate: “Hmm, I don't know that.” In contrast, their first session with Kagi's AI app (a voice-activated mobile app we’re still working on) lasted nearly 45 minutes. They would ask, “Do you like tiramisu or carrots?” then move on to “Why do I have to eat vegetables?” all the way to “What happens if a pigeon poops on Dad's head?” (Yes, they actually asked that.) Kagi reliably delivered answers they found enlightening and entertaining, especially when the questions revolved around Dad in funny situations.

In a similar fashion, Kagi is usually the most engaging assistant at dinner parties when I demo it. “What are the chances of Jake going on a date with Amanda?” is not something Siri or Google can handle out of the blue. Kagi excels at open-ended, casual questions and keeps the conversation going with fun and relevant answers.

You can also use Kagi for quick access to obscure or long-tail information. Learning about the interests and projects your contacts have helps you build better connections with them. So on the way to a business meeting recently, Kagi pulled information from blog posts and interviews to teach me more about the person I was going to meet.

Or you can use Kagi to expand your search instead of narrowing it. When I wanted to find out how much ski schools in the area generally cost, Kagi gave me an (unexpected) answer that allowed me to explore the topic further. Compare that to asking Google, where you get hit by a wall of ads and links that offer few specifics and even less hope. No thanks.

And getting quick, no-frills restaurant recommendations from Kagi makes eating out easy. One question gets one answer, cutting out all the analysis paralysis and indecision that often occur over a choice that has no “right” answer anyway. Just ask and go. Bon appetit!

Imagine a world where the market grades AI assistants by their ability and offers them at different price points. Depending on your budget and tolerance, you can buy beginner, intermediate, or expert AIs. They’ll come with character traits like tact and wit, or certain pedigrees, interests, and adjustable bias. You could customize an AI to be conservative or liberal, sweet or sassy!

And imagine an AI that can answer a question that requires it to digest pages of documents, even entire books or hours of videos, to come up with a 200-word summary.

In this future, instead of everyone sharing the same Siri, you’ll have your completely individual Mike or Julia or Jarvis – the AI. The more you tell your assistant, the better it can help you, so when you ask it to recommend a good restaurant nearby, it’ll provide options based on what you like to eat and how far you want to drive. Ask it for a good coffee maker, and it’ll recommend choices within your budget from your favorite brands.

We invite you to play with Kagi. Go ahead and ask it all those questions that you always wanted to ask Siri or Alexa, but could not get a reply to. Invite your friends to play along. And if you like our idea, please share it on your social media. Thank you.